Global Clothing Manufacturer and Retailer Amazon Redshift Re-Architecture | mLogica

Executive Summary

Latency = delay. Also referred to as a ping rate, it is the amount of delay (or time) it takes to send information from point to point. Latency is usually measured in milliseconds. In retail and e-commerce, it can represent the difference between success and failure.

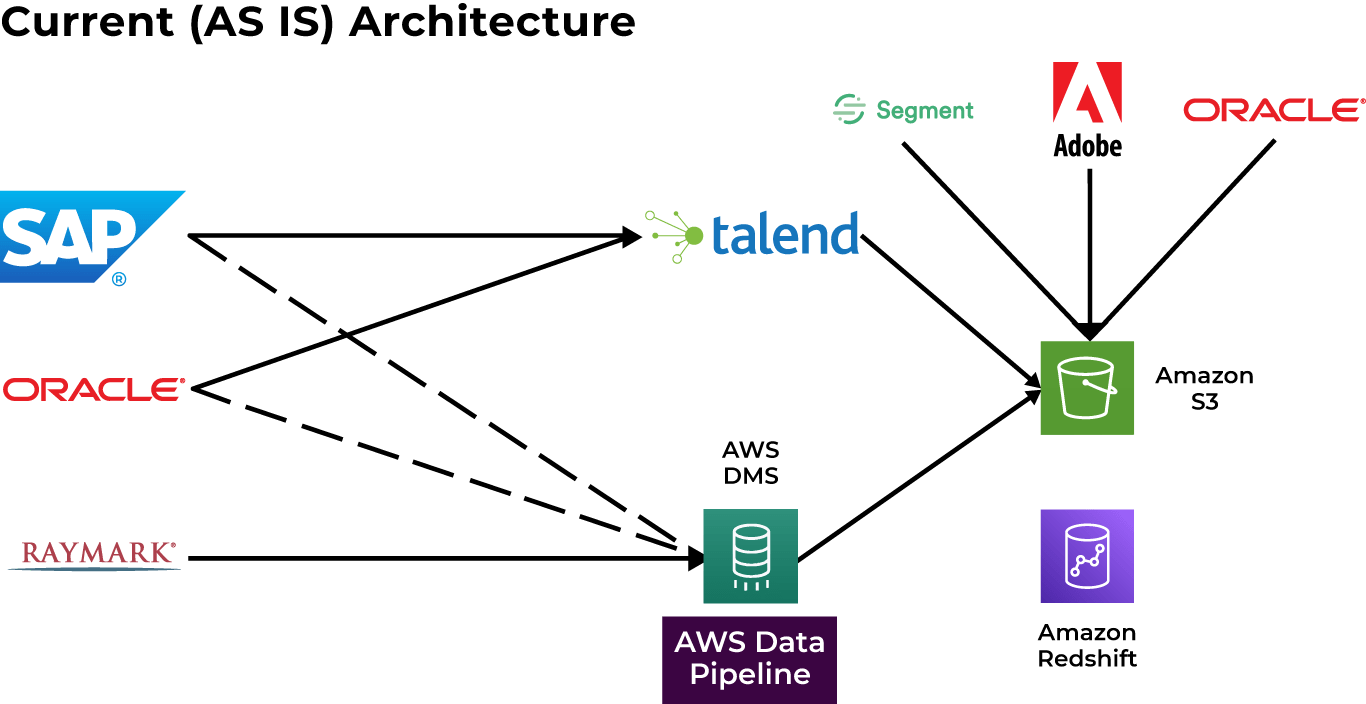

The Customer, an iconic American clothing manufacturer, was in the process of migrating databases from multiple legacy database vendors to Amazon Web Services (AWS).

As is common with migration efforts, the new AWS environment was experiencing slow response times leading to a loss in transactions. To put this in perspective, an outage on Black Friday would cost the Customer over $45,000 per/minute.

Customer Challenges

The Customer had created a Redshift Database Environment that facilitated collation of data from multiple sources across both a transactional and analytical platform. The purpose of the data warehouse was to maintain a repository of data on which can be analyzed, reported on, and used to make business decisions ahead of planned events or key sales periods. The data warehouse that was deployed had a number of functions that it needed to support in order to fulfill its purpose. Those functions included the ability to ingest large quantities of data from multiple transactional and analytical database platforms, such as Oracle and SQL Server, transform the ingested data into the correct format, and store it in the Redshift warehouse in a timely manner; all while providing Business Critical Reports to key personnel that would use the data to make key business decisions. The system as designed was not able to fulfil the basic functions outlined, as it was frequently crashing. In essence, the system lacked the predictability, stability, and scalability.

- The Customer’s Data analytics system was the primary analytics platform for customer data

- The Data analytics system was on Redshift and ran daily data pipelines to load and transform data in Redshift

- The majority of these Online Transactional Processing (OLTP) activities were run during the their business window

- The analytics team was dependent on this data for ad hoc analysis, Tableau dashboards, and other analytics processes

- Inefficiencies in the system meant that jobs were getting stuck

- mLogica identified locks blocking critical ELT jobs

- Manual processes

- Lack of resources

- Short window

The Customer wanted to bring in more data from internal data sources, as well as, third party data sources and open the analytics platform to more users globally.

The Solution

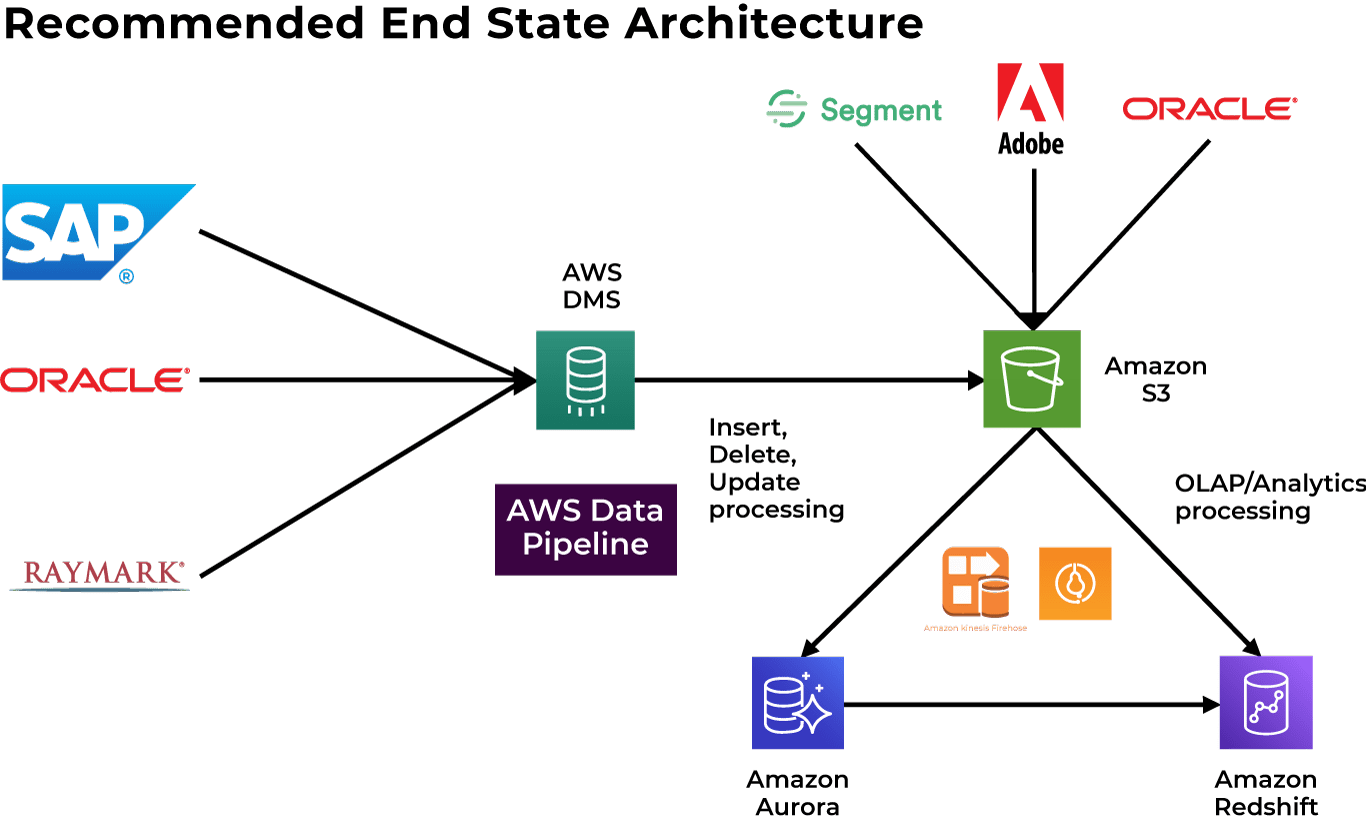

The mLogica team began with a detailed analysis of the environment to review the Redshift design and architecture as well as the key processes and tools used to enable the functionality required of the system and establish the baseline issues impacting the stability of the environment. After a thorough analysis, mLogica was able to determine that the original design forced key processes were in constant contention with each other thereby causing the system to become unstable and crash. mLogica recommended modification of the processes, new configuration settings for the tools which were being used to support the key functional requirements, and a new deployment architecture more suited to support the requirements of the system and to achieve the performance goals outlined early in the engagement. A resolution plan for the existing issues was proposed to the Customer with mLogica providing a best practices and recommendations document along with a conceptual architecture diagram to improve the end-to-end data pipeline performance including DMS and Redshift service optimization.

The Customer accepted our recommendations and the new configuration deployed which allowed achieved the following results

- Predictability: Processes finished on time with no contention

- Stability: System uptime targets were achieved

- Scalability: The ability to scale up to meet peak demands was accomplished

The Benefit

mLogica implemented the recommended end state architecture for separate workloads between Aurora and Redshift; including directing the insert, delete, and update processing to Aurora; performing transformational processing at the DMS level (ETL) or at Aurora level (ELT) and using Redshift only for analytics.

After the new architecture was implemented, mLogica directed the users to Redshift based on their use cases. Batch processing was also directed accordingly. This eliminated user and processing restrictions.

This optimization enabled the Customer to separate development/quality assurance from the production instance for both Redshift and Aurora as part of the proposed end-state architecture. Additionally, high availability/disaster recovery was implemented and CloudWatch utilized to monitor various aspects of the Redshift cluster. These best practices enabled the Customer to define data residency requirements, since the final deployment will be global; review the compliance risks and create mitigation plans; and plan for data security risks – data privacy and GDPR regulations.