mLogica Thought Leaders: Is It Time to Replatform Your IBM Mainframe to AWS?

Anthony Veltri, mLogica Senior V.P. of Solution Management

There are many reasons organizations should be getting serious about modernizing their mainframes, including an aging pool of technical resources, high MIPS costs, the need to eliminate non-strategic platforms and the opportunity to gain critical on-demand scalability, security and innovation. As one of the leading cloud providers, AWS, in conjunction with mLogica, offers a number of key advantages to private and public sector organizations looking to consolidate legacy workloads. These include access to AI, machine learning (ML), advanced analytic tooling and more.

However, the migration of mainframe environments, which typically host mission-critical applications, is highly complex, requiring an in-depth assessment of the existing system followed by an end-to-end migration strategy with automation at its core. Why? Because automating the bulk of repetitive code conversion dramatically slashes timeframes and budgets, and virtually eliminates costly delays caused by human coding errors. A proven example is mLogica’s LIBER*M Mainframe Modernization Suite of automated software, which has been successfully employed globally to accelerate mainframe migrations to the AWS Cloud.

Migrating a mainframe environment to the AWS Cloud involves designing a target architecture that leverages AWS services to replicate or enhance the capabilities of your existing mainframe applications and processes. The specific architecture will depend on your organization's unique requirements, the nature of your applications and your modernization goals, both now and into the future.

Assessing Workloads and Defining Objectives: A Use Case

There are a host of key technical decisions involved in replatforming a mainframe workload to AWS. To illustrate a best-practice strategy, this blog will detail a use case that comprises a typical set of mainframe system workloads, such as:

- A mix of batch and online transactions

- Legacy languages such as COBOL, Assembler and JCL

- Database: IBM Db2 for z/OS, storing 1 TB of data

- Transaction monitor: CICS

- Job scheduler: BMC Control-M

- Files used as interfaces with other systems and intermediate processing: 100 GB

In this use case our objective is to serve the workloads from AWS in a single region using native AWS services wherever possible, including EC2 for compute and Aurora for the database. These workloads should be functionally equivalent and performance should be equivalent or better. The replatforming should have a minimal impact on end users; ideally, they would not need to be aware of a transition.

The replatformed system should also meet critical non-functional requirements, in particular the ability to tolerate or recover from:

- Service unavailability in a data center or loss of a facility due to a natural disaster

- Loss of a WAN connection due to maintenance or failure

- Cyberterrorism, including data corruption threats

Despite the general high reliability of AWS, it is crucial to plan for service interruptions isolated to a single availability zone. For this example, we will focus solely on high availability.

NOTE: In a future post, we will enhance this use case solution to address disaster recovery.

Customizing the Solution

In designing a solution to replatform these mainframe workloads to AWS, we will address three areas:

- The replatforming stack, i.e., software that will run in an AWS compute instance (EC2) that provides mainframe-equivalent transaction (e.g., CICS or IMS DC), batch and security services

- Sizing the AWS compute and data services required to achieve the required performance

- Designing a deployment architecture that provides high availability, i.e., one that will tolerate temporary service interruptions in an AWS availability zone

The LIBER*Z Replatforming Stack

For most workloads, mLogica’s automated LIBER*Z replatforming stack, a module of our LIBER*M Mainframe Modernization Suite, meets all requirements and offers excellent value.

LIBER*Z provides mainframe-equivalent transaction and batch frameworks and further integrates with an enterprise LDAP server like the AWS Active Directory service to provide federated security. With the support of other LIBER*M refactoring tools, LIBER*Z also has several other key features that distinguish it, including:

- Support for migrating VSAM files to relational database tables, so the data can be easily integrated into the AWS data analytics ecosystem

- Automated refactoring of Db2 for z/OS to Aurora PostgreSQL

- Automated refactoring of Assembler to COBOL for language consolidation and to run efficiently in the distributed environment

- Support for C language

- Flexible targets for JCL: LIBER*Z supports either keeping migrated JCL intact or converting it to Linux CSH, Perl or Windows shell

- Compiler independence: LIBER*Z is compatible with several x86 COBOL compilers, so projects can use the optimal compiler and development environment for their workloads

- LIBER*Z implements a true transaction monitor architecture for both CICS and IMS DC online workloads, resulting in better compatibility, scalability and performance. This architecture also facilitates regression testing by offering rich CICS tracing

- LIBER*Z includes key utilities like ZSORT, which replaces mainframe DFSORT, and offers a low-cost job scheduling tool to replace expensive mainframe schedulers
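To make the VSAM-to-relational item above more concrete, here is a minimal Python sketch of what turning one fixed-width mainframe record into a table row involves. It is an illustration only and not how LIBER*Z itself works: the 21-byte record layout, the field names and the choice of EBCDIC code page `cp037` are all assumptions made for the example.

```python
from decimal import Decimal


def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode an IBM packed-decimal (COMP-3) field into a Decimal."""
    digits = []
    for byte in raw[:-1]:
        digits.append(byte >> 4)       # high nibble is one digit
        digits.append(byte & 0x0F)     # low nibble is the next digit
    digits.append(raw[-1] >> 4)        # last byte: one digit plus the sign nibble
    sign_nibble = raw[-1] & 0x0F
    value = int("".join(str(d) for d in digits))
    if sign_nibble == 0x0D:            # 0xD marks a negative value
        value = -value
    return Decimal(value).scaleb(-scale)


def record_to_row(record: bytes) -> dict:
    """Map one record to column values.

    Hypothetical layout (assumed for this sketch):
      CUST-ID  PIC X(6)             bytes 0-5
      NAME     PIC X(10)            bytes 6-15
      BALANCE  PIC S9(7)V99 COMP-3  bytes 16-20
    """
    return {
        "cust_id": record[0:6].decode("cp037").rstrip(),
        "name": record[6:16].decode("cp037").rstrip(),
        "balance": unpack_comp3(record[16:21], scale=2),
    }
```

Each dictionary returned by `record_to_row` could then be inserted into a relational table, at which point the data becomes queryable by standard SQL and analytics tooling.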

AWS Sizing

To inform the architecture, we determine the workload requirements for compute, memory, storage and database. For compute, a general rule is that for every 50 MIPS a workload consumes on an IBM z/OS mainframe, the replatformed workload will require 1 vCPU on AWS. This offers a starting point for narrowing the choice of an EC2 instance type.
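As a rough illustration of the rule of thumb above, the following Python sketch converts a measured MIPS figure into a starting vCPU count. The function name and the input check are our own; the 50 MIPS per vCPU ratio is the only value taken from the text, and it should be treated as a starting point to be validated by testing.

```python
import math

MIPS_PER_VCPU = 50  # rule of thumb from the text; a starting point, not a guarantee


def estimate_vcpus(peak_mips: float, mips_per_vcpu: float = MIPS_PER_VCPU) -> int:
    """Return the number of vCPUs suggested for a workload of peak_mips."""
    if peak_mips <= 0:
        raise ValueError("peak_mips must be positive")
    # Round up so a partial vCPU of demand is never under-provisioned
    return math.ceil(peak_mips / mips_per_vcpu)
```

For example, `estimate_vcpus(2400)` returns 48, while `estimate_vcpus(2410)` rounds up to 49.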

However, since most storage on AWS is network-attached, including both EBS and EFS, the network interfaces on EC2 instances need to have adequate bandwidth to support the I/O load. For a discussion of the advantages of different storage services in a mainframe modernization context, see this related AWS blog, as well as AWS documentation that presents the IOPS potential of different instance types.

Memory

For memory, a good starting point is 4 GiB of RAM per vCPU, the standard ratio in M-family instance types, to be adjusted based on testing with the actual workload. If testing shows the workloads use only 2 GiB per vCPU, AWS makes it easy to switch to an instance type with less memory, which also reduces cost.
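The adjustment described here can be sketched as a simple lookup. The ratios below reflect the usual memory-per-vCPU figures for the compute-optimized (C), general-purpose (M) and memory-optimized (R) EC2 families; the function name and the idea of picking the leanest family that fits are our own simplification.

```python
# Typical GiB of RAM per vCPU for three common EC2 families
FAMILY_RATIOS = {"c": 2, "m": 4, "r": 8}


def pick_family(observed_gib_per_vcpu: float) -> str:
    """Return the leanest family whose RAM-per-vCPU ratio covers the observed need."""
    for family, ratio in sorted(FAMILY_RATIOS.items(), key=lambda kv: kv[1]):
        if observed_gib_per_vcpu <= ratio:
            return family
    raise ValueError("workload needs more than 8 GiB per vCPU")
```

A workload that tests at 2 GiB per vCPU maps to the C family, one at 3.5 GiB per vCPU stays on the M family, and anything above 4 GiB per vCPU points to the R family.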

Storage

Storage requirements depend on the system’s use of files on mainframe DASD for interfaces and intermediate processing. A comprehensive assessment of the existing system is required to make this calculation; in this use case, that assessment must take into account that VSAM files in particular will move to relational tables. We also assume the workloads require a maximum of 100 GB of file storage.

Relational Database Options on AWS

Migrating to AWS offers multiple relational database alternatives. In business cases where there is a requirement to align with a commercial database, AWS provides options for both Oracle and MS SQL Server.

In the absence of such requirements, Amazon Aurora for PostgreSQL is a popular relational database choice. PostgreSQL offers solid power and performance, and the Aurora service handles the heavy lifting of database administration, patches, backups, replication and upgrades reliably and with less effort than other options.

Since Amazon Aurora spans availability zones within a region, it is also well suited to the high availability requirement of this use case. Aurora allows the solution to run a primary instance in one availability zone and a read replica in another. In the event of a failover, the read replica is automatically promoted to become the primary instance.

In terms of sizing, Amazon Aurora Serverless expands and contracts processing and storage automatically based on demand within a defined range of Aurora capacity units (ACUs); testing will indicate the appropriate production range. In this use case, as a starting point we will provision 1 TB of Aurora storage and a range of 4-32 ACUs of processing power.
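To give a feel for what the 4-32 ACU range means, the sketch below translates it into approximate memory and into a scaling configuration. It assumes Aurora Serverless v2 (the text does not say which version is intended), where each ACU corresponds to roughly 2 GiB of memory. The returned dictionary has the shape of the `ServerlessV2ScalingConfiguration` argument accepted by the boto3 RDS `create_db_cluster` call; the helper names are ours.

```python
GIB_PER_ACU = 2  # each ACU corresponds to roughly 2 GiB of memory


def serverless_scaling(min_acu: float, max_acu: float) -> dict:
    """Build a scaling range in the shape of ServerlessV2ScalingConfiguration."""
    if not 0 < min_acu <= max_acu:
        raise ValueError("expected 0 < min_acu <= max_acu")
    return {"MinCapacity": min_acu, "MaxCapacity": max_acu}


def memory_range_gib(min_acu: float, max_acu: float) -> tuple:
    """Approximate database memory at the bottom and top of the ACU range."""
    return (min_acu * GIB_PER_ACU, max_acu * GIB_PER_ACU)
```

With the starting range from this use case, `memory_range_gib(4, 32)` gives roughly 8 GiB to 64 GiB of database memory, which is a useful sanity check against the buffer pool sizes of the source Db2 system.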

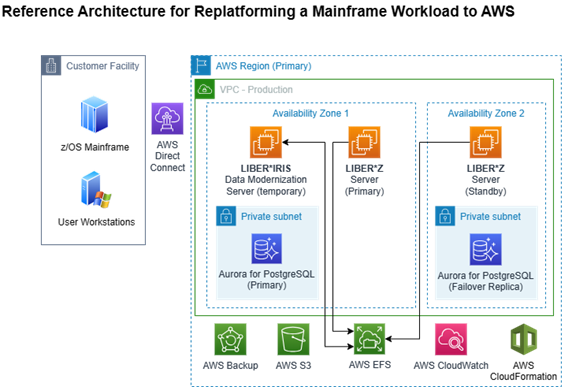

Deployment Architecture

Having established these building blocks, we can now lay out a hypothetical architecture and estimate the AWS costs. For this exercise, we will focus on the production environment only; however, in most cases a system will also require at least two additional non-production instances for development and testing. The mLogica reference architecture for this approach relies on EC2 instances sized for the workload, an Aurora Serverless multi-availability zone cluster and EFS storage shared across availability zones.

For compute, the best starting point at the time of writing is a primary EC2 instance of type m7i.12xlarge in one availability zone (AZ) of the chosen AWS region, with an identical instance in a second AZ to meet the high availability requirement. This instance type provides 48 vCPUs, which at roughly 1 vCPU per 50 MIPS (equivalent to one physical core per 100 MIPS) corresponds to a workload of about 2,400 MIPS, and 4 GiB of RAM per vCPU, which is aligned with the LIBER*Z starting point benchmarks.
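The arithmetic behind this choice can be sketched in a few lines of Python. The 2,400 MIPS input used in the example is an assumption inferred from 48 vCPUs at 50 MIPS each, since the use case does not state a MIPS figure; the table lists the vCPU counts of the standard (non-metal) m7i sizes, and the function name is ours.

```python
import math

# vCPU counts for the standard m7i sizes (this family has 4 GiB of RAM per vCPU)
M7I_VCPUS = {
    "m7i.large": 2, "m7i.xlarge": 4, "m7i.2xlarge": 8, "m7i.4xlarge": 16,
    "m7i.8xlarge": 32, "m7i.12xlarge": 48, "m7i.16xlarge": 64,
    "m7i.24xlarge": 96, "m7i.48xlarge": 192,
}


def smallest_m7i_for(peak_mips: float, mips_per_vcpu: float = 50):
    """Return (instance type, vCPUs, RAM in GiB) for the smallest m7i size that fits."""
    needed = math.ceil(peak_mips / mips_per_vcpu)
    for name, vcpus in sorted(M7I_VCPUS.items(), key=lambda kv: kv[1]):
        if vcpus >= needed:
            return name, vcpus, vcpus * 4
    raise ValueError("workload exceeds the largest m7i size; consider splitting it")
```

Under these assumptions, `smallest_m7i_for(2400)` returns `("m7i.12xlarge", 48, 192)`. A smaller workload of 1,600 MIPS would land on m7i.8xlarge instead.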

The mLogica migration team may recommend a change to the instance after testing with the actual workloads. Each instance is configured with minimal EBS storage for the Linux operating system, LIBER*Z and the deployed code.

Ensuring High Availability

To provide high availability, we will configure Amazon CloudWatch to promptly detect any loss of availability of the primary instance. Detection would initiate failover by promoting the Aurora replica in the failover availability zone to be the primary read/write database and redirecting traffic to the failover EC2 instance using Amazon Route 53.
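The two failover steps could be scripted along the following lines, for example in a function invoked when a CloudWatch alarm fires (how the alarm triggers the code is an assumption; the text does not specify it). The sketch takes boto3-style `rds` and `route53` clients as arguments and uses the `failover_db_cluster` and `change_resource_record_sets` calls. All identifiers, the record name, the IP address and the 60-second TTL are hypothetical placeholders.

```python
def build_dns_change(record_name: str, standby_ip: str, ttl: int = 60) -> dict:
    """Build a Route 53 change batch that points the record at the standby instance."""
    return {
        "Comment": "Fail over to standby EC2 instance",
        "Changes": [{
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": record_name,
                "Type": "A",
                "TTL": ttl,
                "ResourceRecords": [{"Value": standby_ip}],
            },
        }],
    }


def fail_over(rds, route53, cluster_id, replica_id, zone_id, record_name, standby_ip):
    """Promote the Aurora replica, then redirect traffic to the standby EC2 instance."""
    # Step 1: ask Aurora to promote the replica in the failover availability zone
    rds.failover_db_cluster(
        DBClusterIdentifier=cluster_id,
        TargetDBInstanceIdentifier=replica_id,
    )
    # Step 2: repoint DNS so clients reach the standby application server
    route53.change_resource_record_sets(
        HostedZoneId=zone_id,
        ChangeBatch=build_dns_change(record_name, standby_ip),
    )
```

Passing the clients in as parameters keeps the logic testable without AWS credentials. In practice, a short DNS TTL matters here, because clients continue to use the old address until their cached record expires.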

Key Additional AWS Services

- For optimal connectivity from the customer site to the AWS Region, we recommend provisioning an AWS Direct Connect WAN connection. This should provide at least as much bandwidth as the peak consumed by the legacy mainframe.

- To protect against threats that may corrupt data, like software defects, user error or malicious attacks, we leverage AWS Backup to keep periodic backups of the EBS, EFS and Aurora content.

- In alignment with best practices, the team will use CloudFormation to design and deploy the architecture. This will enable the customer team to manage the deployment architecture itself as well as code and deploy changes using DevOps processes.
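As a small illustration of the infrastructure-as-code point above, the following Python sketch generates a CloudFormation template in JSON form that declares the two identical EC2 instances in different availability zones. It is a deliberately reduced fragment, not the mLogica reference template: networking, security groups, Aurora and EFS are omitted, and the logical names `PrimaryInstance` and `FailoverInstance` and the `ImageId` parameter are assumptions.

```python
import json


def build_template(instance_type: str = "m7i.12xlarge") -> dict:
    """Return a minimal CloudFormation template with one instance per availability zone."""

    def instance(az_index: int) -> dict:
        return {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                "InstanceType": instance_type,
                "ImageId": {"Ref": "ImageId"},
                # Pick the Nth availability zone of the region the stack is deployed in
                "AvailabilityZone": {"Fn::Select": [str(az_index), {"Fn::GetAZs": ""}]},
            },
        }

    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {"ImageId": {"Type": "AWS::EC2::Image::Id"}},
        "Resources": {
            "PrimaryInstance": instance(0),
            "FailoverInstance": instance(1),
        },
    }
```

Calling `json.dumps(build_template(), indent=2)` produces a document that can be kept under version control and reviewed like any other code change, which is what makes the DevOps workflow described above possible.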

Data Modernization Plan

The architecture also includes a server for mLogica’s LIBER*IRIS Mainframe Data Modernization Suite, which will be used to migrate mainframe data to AWS Aurora and EFS. LIBER*IRIS generates extraction and transfer scripts on the mainframe to upload the data to EFS, as well as scripts on EC2 to load the data from EFS into Aurora.

A key feature of this approach is that the mLogica team will never require access to production data, as the scripts generated can be run by the customer. The LIBER*IRIS EC2 instance will be deprovisioned once the replatformed system is in production.

NOTE: In a future post, we will provide the details of this data migration process.

Conclusion

This reference approach to replatforming a mainframe workload to AWS provides a baseline that is easily augmented to meet any additional client requirements. In an upcoming blog we’ll examine how utilizing DevOps best practices accelerates mainframe modernization projects while mitigating risk.

Migrating mainframe infrastructure to the cloud requires expertise, top-flight automation software and proven methodologies. Engaging with mLogica’s AWS cloud migration and automation specialists can help ensure a seamless transition that quickly maximizes the benefits of migration for accelerated ROI.

Want to learn more? Contact us